Complete List of Articles:

Working with MySQL and Python, MySQL connector and Python3, Dropping and Truncating a table in MySQL using Python, How to insert multiple records to MySQL using Python

Working with MySQL 5.7 and 8.0 using Python 3, Connecting with MySQL using Python, Inserting and Deleting data from MySQL using Python, Data processing with MySQL and Python

Hosting Django application on DigitalOcean Server with custom Domain. Using WSGI server to host the Django application. Hosting Django application with Gunicorn, Supervisor, NGINX. Serving static and media files of Django application using NGINX. Using GoDaddy Domain to serve traffic of Django application. Production deployment of Django applications.

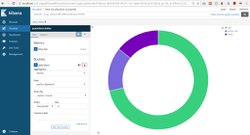

Minimal example of how to create a bar chart or pie chart in Django application using Charts.js. Drawing Analytics charts in Django application. Using charts and graphs to make your Django application visually appealing. Creating Dashboards using Charts.js in Django to convey the information more visually.

Django application with REST API endpoints to automate the WhatsApp messaging. Automating WhatsApp web using selenium to send messages. Using selenium to automate whatsapp messaging. Sending bulk messages via whatsapp using automation. Source code Django application Whatsapp Automation

Automating WhatsApp web using selenium to send messages. Using selenium to automate whatsapp messaging. Sending bulk messages via whatsapp using automation.

Validating image before storing it in database or on file storage. Checking the size of uploaded image in python django. Checking the extension of uploaded image in python django. Checking the content size of uploaded image. Checking the mime-type of uploaded Image. Validating a malicious image before saving it. Using python-magic package

By default, the Kafka client uses a blocking call to push the messages to the Kafka broker. We can use the non-blocking call if application requirements permit. Here we will explore how we can use the non-blocking approach to push data to Kafka, what is the impact, and what could be the concern points and how to take care of them.

Writing a proper git commit message is a good coding practice. A good commit message should clearly convey what has been changed and why it has been changed. Sometimes developers use an improper message in a hurry to commit and push the changes. Here is a working hook that forces the developer to use a commit message of 30 characters. You may also start including the branch name in the commit message using a git hook.
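
As a rough illustration, the core check of such a hook might look like the sketch below. It assumes the 30 characters are a minimum length for the first line of the message; the function name `is_acceptable_message` and the constant `MIN_LENGTH` are made up for this example and are not taken from the article.

```python
MIN_LENGTH = 30  # assumption: 30 characters is treated as a minimum length


def is_acceptable_message(message: str, min_length: int = MIN_LENGTH) -> bool:
    """Return True when the first line of the commit message is long enough."""
    lines = message.strip().splitlines()
    first_line = lines[0].strip() if lines else ""
    return len(first_line) >= min_length
```

In a real `commit-msg` hook, git passes the path of the file holding the message as the first command-line argument, so the script would read that file, call the check, and exit with a non-zero status to reject the commit.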

How to revert to the previous kernel version of Ubuntu, Showing grub menu during boot-up process, selecting the desired kernel version from grub menu, permanently selecting the kernel version, updating grub file, Setting timeout in grub menu

How to store the git credentials in git credentials manager, Using GCM to avoid typing the git credentials every time you run a git command, Different ways to store git username and passwords, increase the productivity by not typing git password every time

The rising popularity of Python, why is python so popular, what is the future of Python, Is python going to be the top choice by 2025,

mini project, python snippet sharing website, Django project free, Final year project free, Python Django project free,

Benefits of using Django and Node.js, Django vs Node.js: Which One Is Better for Web Development?, Basic features of Django and Node.js, Drawbacks of using Django and Node.js

Zen of python, import this, the hidden easter egg with the joke, source code of Zen of python disobeys itself

A complete list of Django-Admin commands with a brief description, Django-admin commands list, Cheatsheet Django Admin commands,

QR code image to text generator in Python Django, Text to QR code image generator in Python Django, Implementing QR code generator in Python Django. Serving static files in Django media

In this article, we will see how to integrate the Login with GitHub button on any Django application. We will use plain vanilla GitHub OAuth instead of the famous Django-AllAuth package.

Multiple language support in Django application, translation of Django application, Django admin site translation, Model and field keys language translation in Django application, Translating your Django application to Japanese

Implementing 2FA in Django using Time-based one time password, Enhancing application security using 2FA and TOTP, Generating QR code for Authenticator Applications, Time based 6 digit token for enhanced security, Python code to generate TOTP every 30 seconds, HMAC, TOTP, Django, 2FA, QR Code, Authenticator, Security, Token, Login,
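
To make the idea of a time-based token concrete, here is a minimal sketch of TOTP generation using only the standard library. It assumes the common defaults (HMAC-SHA1, a 30 second step, 6 digits) and a Base32-encoded shared secret; the function name `totp` and its parameters are chosen for this example and may differ from the article's code.

```python
import base64
import hashlib
import hmac
import struct
import time


def totp(secret_b32, for_time=None, step=30, digits=6):
    """Return the time-based one-time password for a Base32 secret."""
    key = base64.b32decode(secret_b32, casefold=True)
    now = time.time() if for_time is None else for_time
    # The moving factor is the number of whole time steps since the Unix epoch.
    counter = int(now // step)
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte window.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)
```

An authenticator application that holds the same secret computes the same value for the current 30 second window, which is what the login view would compare against.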

Generating PDF using python reportlab module, Adding table to PDF using Python, Adding Pie Chart to PDF using Python, Generating PDF invoice using Python code, Automating PDF generation using Python reportlab module

In this article, we are accessing Gmail inbox using IMAP library of python, We covered how to generate the password of an App to access the gmail inbox, how to read inbox and different category emails, how to read promotional email, forum email and updates, How to search in email in Spam folder, how to search an email by subject line, how to get header values of an email, how to check DKIM, SPF, and DMARC headers of an email

In this article we will see how to send alerts or messages to Microsoft Teams channels using connectors or incoming webhooks. We used Python's requests module to send the POST request.

Sending email with attachments using Python built-in email module, adding image as attachment in email while sending using Python, Automating email sending process using Python, Automating email attachment using python

Python requests library to send GET and POST requests, Sending query params in Python Requests GET method, Sending JSON object using python requests POST method, checking response headers and response status in python requests library

How to use Jupyter Notebook for practicing python programs, jupyter notebook installation and usage in linux ubuntu 16.04, Writing first program with Jupyter notebook, uploading file in jupyter notebook

Python program to convert Linux file permissions from octal number to rwx string, Linux file conversion python program, Python script to convert Linux file permissions
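
One possible way to do this conversion is sketched below. The function name `octal_to_rwx` is an assumption for this example; it reads the last three octal digits as owner, group, and others.

```python
def octal_to_rwx(octal: str) -> str:
    """Convert an octal permission string such as '755' to 'rwxr-xr-x'."""
    flags = "rwx"
    parts = []
    for digit in octal[-3:]:
        value = int(digit, 8)  # raises ValueError for digits outside 0-7
        # Bit 4 is read, bit 2 is write, bit 1 is execute.
        parts.append(
            "".join(flag if value & (4 >> i) else "-" for i, flag in enumerate(flags))
        )
    return "".join(parts)
```

For example, `octal_to_rwx("644")` gives the familiar `rw-r--r--` string shown by `ls -l`.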

Performing different file operations in Python, Reading and writing to a file in python, read vs readline vs readlines in python, write vs writelines in python, how to read a file line by line in python, read vs write vs append mode in python file operations, text vs binary read mode in python, solving error can't do nonzero end-relative seeks

There is an option in python where you can execute a function when the interpreter terminates. Here we will see how to use atexit module.
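
A minimal sketch of the idea, with a hypothetical `goodbye` function registered to run at interpreter shutdown:

```python
import atexit


def goodbye(name):
    # Runs when the interpreter terminates normally.
    print(f"Goodbye, {name}!")


# Extra positional arguments are passed to the function when it is called.
atexit.register(goodbye, "reader")
```

Registered functions run on normal termination. They are not called when the process is killed by an unhandled signal or when `os._exit()` is used.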

There are a few different formats of the body we can use while sending post requests. In this article, we tried to send post requests to different endpoints of our hello world Django application from the postman. Let's see what is the difference between discussed body formats.

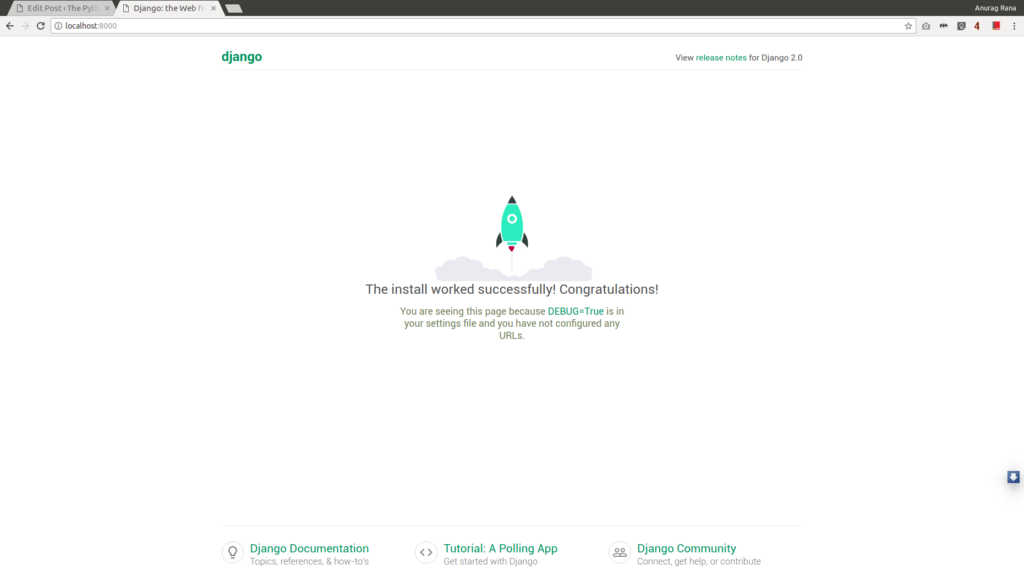

In this article, we will see how to start working with Django 2.2, Step by step guide to install Django inside a virtual environment and starting the application on localhost, Django 2.2 installation, first Django project, hello world in Django 2.2, First Django application

In this article, we will see how to access the query parameters from a request in the Django view, Accessing GET attribute of request, get() vs getlist() method of request in Django, query parameters Django,

This article explains what is ValueError: invalid literal for int() with base 10 and how to avoid it, python error - ValueError: invalid literal for int() with base 10, invalid literal for base 10 error, what is int() function, converting string to integer in python
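
One common way to avoid the error is to wrap the conversion and fall back to a default. The helper name `to_int` below is an assumption made for this sketch.

```python
def to_int(text, default=None):
    """Convert text to int, returning default when it is not a valid integer."""
    try:
        return int(text)
    except ValueError:
        # Raised for input such as "abc", "" or "12.5".
        return default
```

Note that a string such as `"12.5"` also triggers the error. Converting it with `float()` first is one option when decimal input is expected.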

In this article we are trying to understand what a NoneType object is and why we get python error - TypeError: 'NoneType' object is not iterable, Also we will try different ways to handle or avoid this error, python error NoneType object is not iterable, iterating over a None object safely in python
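
The error usually appears when a function silently returns `None` and the caller loops over the result. The sketch below uses two hypothetical helpers, `find_tags` and `safe_iter`, to show the problem and one way to guard against it.

```python
def find_tags(text):
    """Hypothetical helper that forgets to return a list when nothing matches."""
    tags = [word for word in text.split() if word.startswith("#")]
    if tags:
        return tags
    # No explicit return here, so the function returns None.


def safe_iter(value):
    """Return an empty list in place of None so a for loop is always safe."""
    return value if value is not None else []
```

Looping with `for tag in safe_iter(find_tags(text))` never raises, although fixing `find_tags` to return an empty list is usually the cleaner solution.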

Compressing an image in Django before storing it to the server, How to upload and compress an image in Django python, Reducing the size of an image in Django, Faster loading of Django template, Solving cannot write mode RGBA as JPEG error,

This article explains the simple steps of uploading and storing an image in Django application, After storing the image, how to use it in Django template or emails, Uploading a file in Django, Storing image in Django model, Uploading and storing the image in Django model, HTML for multipart file upload

This article lists the differences between three major options to deploy Django application, We are briefly comparing the digital ocean, pythonAnyWhere, and AWS EC2 to host the Django application here, AWS EC2 Vs PythonAnyWhere for hosting Django application, hosting Django application for free on AWS EC2, Hosting Django application for free on PythonAnyWhere, comparing EC2 and Pythonanywhere and DigitalOcean

This article explains 3 methods to reset the user password in Django, What command should be used to reset the superuser password from the terminal in Django application, Changing the user password in Django

how to use IF ELSE in Django template, Syntax of IF, ELIF and ELSE in Django, Using filters within IF condition in Django template, Multiple elif (else if) conditions in Django template

Store the result of repetitive python function calls in the cache, Improve python code performance by using lru_cache decorator, caching results of python function, memoization in python
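
A small sketch of memoization with `functools.lru_cache`, using a recursive Fibonacci function as a stand-in for any expensive, repeatable call:

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(n):
    """Return the n-th Fibonacci number; repeated calls are served from the cache."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)
```

`fib.cache_info()` reports hits and misses, and `fib.cache_clear()` empties the cache. The decorator only works when all arguments are hashable.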

Declaring a new variable in Django template, Set the value of a variable in Django template, using custom template tag in Django, Defining variables in Django template tag

flash messages in Django template, one-time notifications in Django template, messages framework Django, displaying success message in Django, error message display in Django

Using a custom domain for Django app hosted on AWS EC2, GoDaddy DNS with EC2 instance, Django App on EC2 with GoDaddy DNS, Using domain name in Nginx and EC2, Elastic IP and DNS on EC2

AWS EC2 Vs PythonAnyWhere for hosting Django application, hosting Django application for free on AWS EC2, Hosting Django application for free on PythonAnyWhere, comparing ec2 and pythonanywhere

Step by step guide on hosting Django application on AWS ec2 instance, How to host the Django app on AWS ec2 instance from scratch, Django on EC2, Django app hosting on AWS, Free hosting of Django App

improving your python skills, debugging, testing and practice, pypi

The simplest explanation of Decorators in Python, decorator example in python, what is a decorator, simple example decorator, decorator for a newbie, python decorators, changing function behavior without changing content, decorators
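
As a minimal sketch, a decorator is a function that takes a function and returns a wrapped version of it. The names `shout` and `greet` below are made up for the example.

```python
import functools


def shout(func):
    """Decorator that upper-cases the string returned by func."""
    @functools.wraps(func)  # keeps the original name and docstring
    def wrapper(*args, **kwargs):
        return func(*args, **kwargs).upper()
    return wrapper


@shout
def greet(name):
    return f"hello, {name}"
```

The body of `greet` is unchanged, yet calling it now returns upper-case text, which is the "changing function behavior without changing content" idea.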

rarely used Django template tags, lesser-known Django template tags, 5 awesome Django template tags, Fun with Django template tags,

Solving KeyError in python, How to handle KeyError in python dictionary, Safely accessing and deleting keys from python dictionary, try except Key error in Python
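
The three usual approaches can be sketched as follows. The helper names and the sample dictionary are assumptions made for this example.

```python
def price_of(prices, item, default=0):
    # dict.get returns the default when the key is missing.
    return prices.get(item, default)


def remove_item(prices, item):
    # dict.pop with a default never raises KeyError.
    return prices.pop(item, None)


def price_or_message(prices, item):
    try:
        return prices[item]
    except KeyError:
        return f"{item} is not in the price list"
```

`dict.get` suits lookups with a sensible default, while `try`/`except KeyError` is a better fit when a missing key needs separate handling.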

IP laws and coding, patenting code, code copyrights, intellectual property law and code

Python Script to Set bing image of the day as desktop wallpaper, Automating the desktop wallpaper change to bing image of day using python code, changing desktop wallpaper using python, downloading an image using python code, updating the desktop wallpaper daily using python

Django template fiddle, Experimenting with Django templates, Playing with Django template, Django fiddle, template fiddle, djangotemplatefiddle.com,

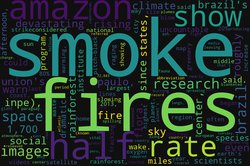

word cloud python, generating a word cloud of a text using python code, python code to generate a word cloud image of a text. Using word frequencies to generate a word cloud image using a python script

XSS attack in Django, preventing cross-site scripting attack in Django website, avoid XSS in Django application, Enabling SECURE_BROWSER_XSS_FILTER in Django website, blocking cross-site scripting attack on Django website

Creating atom or RSS feed of your Django site, Adding syndication feed in your django application, How to add RSS feed in Django website

Creating a sitemap for your Django application, Improve SEO of your Django website by generating Sitemap.xml file, Generate sitemap from Dynamic URLs in Django Application, Create Sitemap for static pages in your Django application, Sitemap XML file in Django Applications,

using for loop in Django templates, Using break in Django template for loop, Using range in django template for loop, How to access index in for loop in django template, for - empty in django template

Adding favicon to Django website, SEO of Django blog, Branding of Django website, How to add a favicon to Django website, Adding shortcut icon, website icon, tab icon, URL icon, or bookmark icon to Django website and blogs

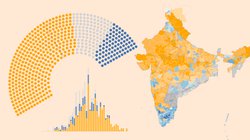

scraping 2019 election data india, Scraping data of 2019 Indian General Election using Python BeautifulSoup and analyzing it, using beautifulsoup to collect election data, using requests

display pdf in browser in Django, PDF response in Django, How to display PDF response in Django, Generating PDF from HTML template in Django, Download PDF in Django, Inline pdf display in Django, In browser PDF display in Django, Showing employee payslip in the browser using Django

UnboundLocalError: local variable 'x' referenced before assignment, solved UnboundLocalError in python, reason for UnboundLocalError in python, global vs nonlocal keyword in python, How to solve Python Error - UnboundLocalError: local variable 'x' referenced before assignment, nested scope in python, nonlocal vs global scope in python
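
The sketch below reproduces the error and shows the two usual fixes. All names are illustrative.

```python
counter = 0


def broken_increment():
    # Assigning to `counter` makes it local to this function, so reading it
    # on the right-hand side raises UnboundLocalError.
    counter += 1
    return counter


def fixed_increment():
    global counter  # refer to the module-level variable instead
    counter += 1
    return counter


def make_counter():
    count = 0

    def increment():
        nonlocal count  # refer to the variable of the enclosing function
        count += 1
        return count

    return increment
```

`global` reaches the module-level name, while `nonlocal` reaches the nearest enclosing function scope.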

Creating a port scanner in 8 lines of python, Python script to scan for open ports, python program to connect to a host on given port, socket programming example with python, Checking for open ports on a host using python socket programming

Scraping news headlines using python beautifulsoup, web scraping using python, python script to scrape news, web scraping using beautifulsoup, news headlines scraping using python, python program to get news headlines from web

How to download large csv file in Django, streaming the response, streaming large csv file in django, downloading large data in django without timeout, using django.http.StreamingHttpResponse to stream response in Django, Generating and transmitting large CSV files in django

Text based snake and ladder game in python, play snake and ladder in terminal, terminal based snake and ladder game developed in python, python code for snake and ladder game, python game development snake and ladder,

Logging databases changes in Django Application, Using Django signals to log the changes made to models, Model auditing using Django signals, Creating signals to track and log changes made to database tables, tracking model changes in Django, Change in table Django, Database auditing Django, tracking Django database table editing

Generating ascii art from image, converting colored image to ascii code, python script to convert image to ascii code, python code to generate the ascii image from jpg image.

What can I do with python, what is python used for, What are the uses of python programming language, why should I learn python programming language

starting with python programming, how to start learning python programming, novice to expert in python, beginner to advance level in python programming, where to learn python programming, become expert in python programming

Python program to find whether a year is leap year or not, leap year python example, python code to find leap year, python script leap year
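
A minimal sketch of the Gregorian leap year rule, with `is_leap_year` as an assumed function name:

```python
def is_leap_year(year: int) -> bool:
    """A year is a leap year if divisible by 4, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)
```

The standard library offers the same check as `calendar.isleap(year)`.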

python projects for beginners, 25 python django projects to build over the weekend, list of python Django mini projects, Final year project ideas, complete list of python projects for beginners, python projects to start with, learning python by building projects

Uploading files to FTP server using Python, Python script to connect to ftp server, Python code to login to FTP server and upload file, How to connect to FTP server using python code, ftplib in python, Get server file listing using ftplib in python

print statement in Python vs Other programming languages, comparing python simplicity with other programming languages, how to print a string in python, print statement in python, comparing python with other languages, python vs java, python vs c++

posting on facebook page using python script, automating the facebook page posts, python code to post on facebook page, create facebook page post using python script

how to use else clause with try except in python, when to use else clause with try except in python, try except else finally clauses in python, try except else example in python,
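
The order in which the clauses run can be seen in the sketch below. The function `parse_ratio` and the `events` list are made up for this example.

```python
def parse_ratio(numerator, denominator):
    """Divide two numeric strings and record which clauses were executed."""
    events = []
    try:
        result = int(numerator) / int(denominator)
    except (ValueError, ZeroDivisionError):
        events.append("except")  # runs only when the try block raised
        result = None
    else:
        events.append("else")  # runs only when the try block did not raise
    finally:
        events.append("finally")  # always runs
    return result, events
```

Keeping the success path in `else` keeps the `try` block small, so the `except` clause only catches errors from the lines it is meant to guard.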

Drawing Indian National Flag Tricolor using Python Turtle, Creating an Indian flag using turtle code in python

Python turtle code to create United States of America flag. Drawing USA flag using turtle in python.

How to solve TemplateDoesNotExist error in Django projects, reason for TemplateDoesNotExist error in Django, fixing TemplateDoesNotExist error in Django

email subscription feature in django, sending email subscription confirmation mail in django, sending email unsubscribe email in Django, Add subscription feature in Django

Using PostgreSQL database with python script. Creating Database and tables in PostgreSQL. Insert, update, select and delete Data in PostgreSQL database using python script. Using psycopg package to connect to PostgreSQL database

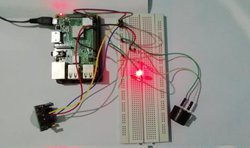

Turn on LED and activate the buzzer when motion is detected using PIR Motion detection sensor. Using PIR motion detection sensor along with buzzer with Raspberry PI. Python Program to detect motion and activating the alarm system using Raspberry Pi.

Starting with Raspberry Pi programming with Python, Controlling LED with Python Program, Blinking LED with Raspberry Pi, Using GPIO pins to control the LED on Raspberry Pi with Python Program, Raspberry Pi and python

How to setup Raspberry Pi, Starting with Raspberry Pi programming with Python, Sending IP address to Telegram channel on Raspberry Pi Boot up, SSH using Raspberry Pi, Raspberry Pi and python

How to setup Raspberry Pi, Installing Raspbian on Raspberry Pi 3 B+ model and configure settings, Starting with Raspberry Pi programming with Python, Configuring WIFI on Raspberry PI, Enabling SSH on Raspberry PI, RaspberryPi and Python

How to setup Raspberry Pi, Installing Raspbian on Raspberry Pi 3 B+ model and configure settings, Starting with Raspberry Pi programming with Python, Raspberry Pi and Python

understanding iterators and generators in python, creating python generators, using generators and iterators in python code
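
As a short sketch, a generator function produces values lazily with `yield`, and the object it returns is itself an iterator. The name `countdown` is chosen for this example.

```python
def countdown(start):
    """Yield start, start - 1, ..., 1 one value at a time."""
    while start > 0:
        yield start
        start -= 1


numbers = countdown(3)
first = next(numbers)    # pulls one value from the generator
remaining = list(numbers)  # consumes whatever is left
```

Once exhausted, a generator cannot be restarted. Calling `countdown(3)` again creates a fresh one.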

top 10 python programming books, List of python books to start with, Start with a python book collection, Buy best python books, Top and best python books,

How to encrypt and decrypt the content in Django, Encrypting the critical information in Django App, Encrypting username, email and password in Django, Django security

Python code to send free emails using Gmail credentials, Sending automated emails using python and Gmail, Using Google SMTP server to send emails using python. Python script to automate gmail sending emails, automating email sending using gmail

How to track email opens. Tracking email sent from django app. Finding the email open rate in Python Django. Email behaviour of users in Python Django. Finding when email is opened by user in python-django.

how to generate public URL to expose your local Django webserver over the Internet. How to let everyone access your Django project running on localhost.

Most common mistakes made by beginner python programmers, Things to avoid by python programmers, Most common pitfalls/ gotchas in python, Bitten by python scenarios, tips for beginner python programmers, python beginner programmers should avoid these mistakes

How to upload and process the content of excel file in django without storing the file on server. Process Excel file content in Django. Uploading and reading Excel file content in Django 2.0 without storing it on server.

Creating sitemap for your Django application, Improve SEO of your Django website by generating Sitemap.xml file, Generate sitemap from Dynamic URLs in Django Application, Create Sitemap for static pages in your Django application, Sitemap xml file in Django Applications,

Adding robots.txt file in your Django application, Easiest way to add robots.txt file in Django, Django application robots.txt file, Why should you add robots.txt file in your Django Application,

Validating json using python code, format and beautify json file using python, validate json file using python, how to validate, format and beautify json
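
One way to validate and beautify JSON with the standard `json` module is sketched below. The function name `validate_and_format` and the shape of its return value are assumptions for this example.

```python
import json


def validate_and_format(text):
    """Return (True, pretty_json) for valid JSON, else (False, error description)."""
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError as error:
        return False, f"line {error.lineno}, column {error.colno}: {error.msg}"
    return True, json.dumps(parsed, indent=4, sort_keys=True)
```

For a quick check from the terminal, `python -m json.tool file.json` performs a similar validate-and-pretty-print step.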

How to create custom 404 error page in Django, Handler404 example Django 2.0, Page not found error in Django 2.0, Custom Error handlers in Django 2.0

Collecting one million website links by scraping using requests and BeautifulSoup in Python. Python script to collect one million website urls, Using beautifulsoup to scrape data, Web scraping using python, web scraping using beautifulsoup, link collection using python beautifulsoup

comparing the performance of docker cluster, multi-threaded approach and scrapy framework to send 1000 requests and processing response.

Finding linux system information like processor details, memory usage and average load using python script. Python program to find Ubuntu information.

Scraping tweets using BeautifulSoup and requests in python. Downloading tweets without Twitter API. Fetching tweets using python script by parsing HTML.

Scraping large amount of tweets within minutes using celery and python, RabbitMQ and docker cluster with Python, Scraping huge data quickly using docker cluster with TOR, using rotating proxy in python, using celery rabbitmq and docker cluster in python to scrape data, Using TOR with Python

Using docker instead of virtual environment for Django app development, starting with Dockerization of your Django application. Django with Docker.

Validating credit card number using Luhn's algorithm, Verifying Credit and Debit card using python script, Python code to validate the credit card number, Luhn's algorithm implementation in Python
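
A compact sketch of the checksum follows. The function name `luhn_is_valid` is an assumption, and the input may contain spaces or hyphens.

```python
def luhn_is_valid(number: str) -> bool:
    """Return True when the digit string passes the Luhn checksum."""
    cleaned = number.replace(" ", "").replace("-", "")
    if len(cleaned) < 2 or not cleaned.isdigit():
        return False
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for index, char in enumerate(reversed(cleaned)):
        digit = int(char)
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9  # same as adding the two digits of the product
        total += digit
    return total % 10 == 0
```

Passing the checksum only shows that the number is well formed. It does not prove that the card exists or is active.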

Scraping Python books data from Amazon using scrapy framework. Bypassing 503 error in scrapy. BeautifulSoup vs Scrapy. Scrapy Data Download.

Creating PDF documents in Python and Django, Returning PDF as response in Django, Generating PDF from HTML template in Django, Using pdfkit and wkhtmltopdf in python and django

Using Django signals to log the changes made to models, Model auditing using Django signals, Creating signals to track and log changes made to models.

Download all instagram images for any user using this python package.

Creating access logs in Django application, Logging using middleware in Django app, Creating custom middleware in Django, Server access logging in Django, Server Access Logging in Django using middleware

Solving Django error 'NoReverseMatch at' URL with arguments '()' and keyword arguments '{}' not found, URL not found in Django, No reverse match in Django template error

A complete guide to start with virtual environments in python. How to install virtual environments. How to create a new virtual environment. Benefits of using virtual environment. docker vs virtual environment

Getting and displaying the latest bitcoin and other crypto currencies prices using python Django. Complete step by step guide to develop a django project to fetch bitcoin prices. Bitcoin prices in python.

Python script to wish merry Christmas using python turtle. Using Python Turtle module to wish merry Christmas to your friends.

How to create reusable django app which can block crawling IPs from accessing your app. Creating distributable python package and upload on pypi.

Scheduling the database backup in Django, script to back up the database periodically in Django app, Delete old backup files periodically in Django app, PythonAnyWhere server database backup in Django,

Using MongoDB in a Django Project with the help of MongoEngine. Non relational schema in Django project. Using nosql database in Django application. MongoDB with python Django

How to find most popular technology on stackoverflow by crawling the stackoverflow site using python. Using python beautifulsoup to crawl web pages on stackoverflow. Python code to crawl stackoverflow, crawling stackoverflow for tags, python script to fetch data from stackoverflow.

Elastic search and kibana and Django. Fast free indexing in elastic search. Indexing Django project data in elastic search and using kibana.

Using elastic search in django projects with the help of kibana. setting up elastic search with kibana and Django. Using indexed searching using elastic.

Opening top 10 google search results in different tabs in one click. How to start multiple tabs with different URLs in one go.

Creating a completely automated telegram channel to generate and post content using python code on regular basis. Automating the Telegram channel using python script

How to automate the process of updating a website hosted on PythonAnyWhere server every time you commit and push code to git repository.

Difference between tuples and lists, raw_input and input, shallow and deep copy, args and kwargs and other different terms explained in python
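
Of these terms, shallow versus deep copy is perhaps the easiest to show in a few lines. The sketch below uses the standard `copy` module with made-up variable names.

```python
import copy

original = [[1, 2], [3, 4]]
shallow = copy.copy(original)    # new outer list, inner lists are shared
deep = copy.deepcopy(original)   # inner lists are copied as well

original[0].append(99)  # mutate an inner list of the original
```

After the append, `shallow[0]` also contains 99 because it is the same inner list object, while `deep[0]` is unaffected.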

How to integrate PayUMoney payment gateway in your Django app in easy steps. Step by step guide for payment gateway integration.

Website crawling for email address, web scraping for emails, data scraping and fetching email address, python code to scrape all emails from a website, automating the email id scraping using python script, collect emails using python script

Python script to convert the ebooks from one format to another in bulk, Automated book conversion to kindle format, Free kindle ebook format conversion, automating the book format conversion, python code to book format convert,

Which is the best server for hosting Django Apps, Best hosting provider for Django Apps, Cheapest Django Hosting, PythonAnyWhere Reviews, Django Hosting,

How to download excel file in django, download data csv and excel file in django, excel file in python, csv and excel file in django python, download excel python-Django

How to use Google reCAPTCHA in Django, Preventing login attack in Django using captcha, Preventing multiple login attempts on login page in Django application using captcha, Integrating Google reCAPTCHA in Django Template,

How to share large files between two systems using python simple HTTP server. share movies using python server. transfer data without pen drive using python.

List of USA states in different Python formats to be used in Django project, Comma separated Values CSV USA states, Python List & Set Django model choice format,

List of Indian states in different formats to be used in python and Django projects. Indian states in CSV, list, set, numbered list and HTML code format.

How to send bulk emails for free using Mailgun and Python-Django, How to send free promotional emails from your Django application. Free email Api.

How to use AJAX in Django projects?, Checking username availability without submitting form, Making AJAX calls from Django code, loading data without refreshing page in django templates, AJAX and Django,

What is zen of python. What is the meaning of zen of python. Explanation of zen of python terms with example. Easter egg Zen of python. import this.

Scheduling task on pythonanywhere server. Step by step process to add cron task on pythonanywhere server free account. Django command scheduling.

creating custom management commands in Django application, Background tasks in Django App, Scheduled tasks in Django, How to schedule a task in Django application, How to create and schedule a cron in Django

How to debug Django code in production environment? How to start logging errors in log files in django. Error loggers in django web application.

Custom template tags in Django, creating new template tags in Django, Step by step guide to create and use custom template tags in Django, how to create custom template tags in Django, how to use custom template tag in Django,

How to create your own awesome 404 error or 500 error page in django. Step by step guide to help you design your own 404 not found error page in Django. Design your own 500 internal server error page in Django application

How to send an email via office 365 in python and Django, Automating the email sending process using Django, Office 365 credentials to send email using Django, Python script to send emails via office 365, Automating office 365 using python, Python script to send emails via office365

How to upload and process the content of csv file in django without storing the file on server. Process CSV content in Django. Uploading and reading csv.

How to extend the default user model in Django, Defining your own custom user model in Django. Writing your own authentication backend in Django, Using Email and Password to login in Django

Creating a hello world Django app, Starting the development of Django application in less than 5 minutes, How to start development in python Django, your first application in Django

How to host any python-Django app on pythonanywhere server for free, Best hosting service provider for python-Django apps, Free Django app hosting, Easiest Hosting for Django, Django hosting for free, Cheapest Django Hosting