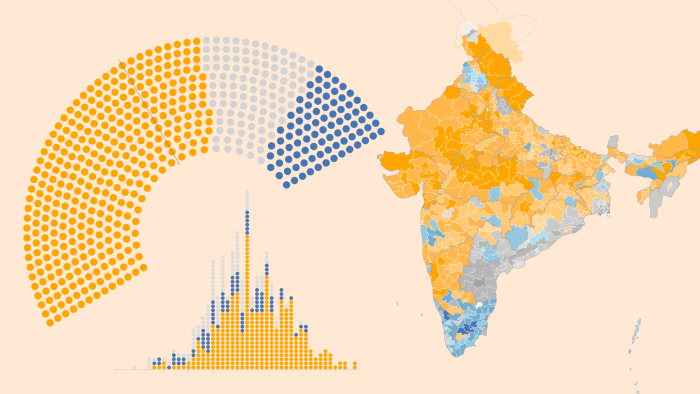

The results of the 2019 Indian General Election were declared on 23 May 2019 and can be viewed on the official website of the Election Commission of India.

In this article, we will scrape the data for each constituency and dump it into a JSON file for further analysis.

The EC website has data for each constituency. To get the stats for a particular constituency, we first select the state from a dropdown and then the constituency from a second dropdown, which is populated based on the selected state. There are 543 constituencies, so collecting the data manually would mean selecting values from the dropdowns 543 times.

That is when Python comes into the picture.

Creating the URL:

The results home page URL for constituency-wise data is http://results.eci.gov.in/pc/en/constituencywise/ConstituencywiseU011.htm

When you change the dropdown value to another state or constituency, the web page reloads and the URL is updated. Try changing the state and constituency a few times and you will find a pattern in the URL.

The URL is built using the following logic:

Base URL (http://results.eci.gov.in/pc/en/constituencywise/Constituencywise) + U for a union territory or S for a state + two-digit state code (01 to 07 for union territories, 01 to 29 for states) + constituency code starting from 1 + .htm?ac= + constituency code

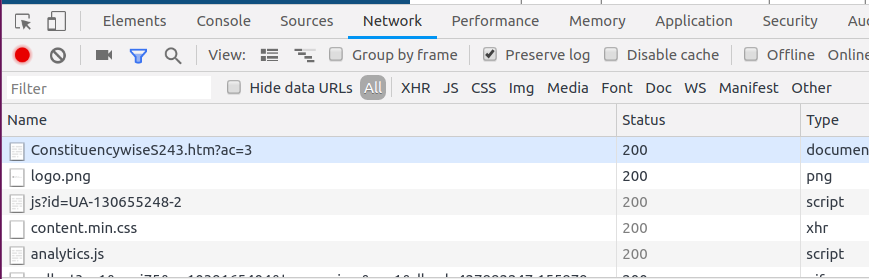

For example, to get the data for the Muzaffarnagar constituency in Uttar Pradesh, the URL becomes http://results.eci.gov.in/pc/en/constituencywise/ConstituencywiseS243.htm?ac=3, where S means state (not union territory), 24 is the code for Uttar Pradesh and 3 is the code for the Muzaffarnagar constituency.
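The pattern above can be captured in a small helper. This is a minimal sketch, assuming the two-digit, zero-padded state codes (U01 to U07, S01 to S29) used by the scraper's state list; build_url and STATE_CODES are hypothetical names, not part of the original code.

```python
# Hypothetical helper that builds a constituency results URL from its parts.
BASE_URL = "http://results.eci.gov.in/pc/en/constituencywise/Constituencywise"

# 7 union territories followed by 29 states: "U01" ... "U07", "S01" ... "S29"
STATE_CODES = [f"U{u:02d}" for u in range(1, 8)] + [f"S{s:02d}" for s in range(1, 30)]


def build_url(state_code: str, constituency_code: int) -> str:
    """Return the results page URL for one state/constituency combination."""
    # The constituency code appears twice: in the file name and in ?ac=
    return f"{BASE_URL}{state_code}{constituency_code}.htm?ac={constituency_code}"


# Muzaffarnagar (code 3) in Uttar Pradesh (state code S24)
print(build_url("S24", 3))
```

Because every state code has a fixed length of three characters, the state part and the constituency part of the file name can never be confused (S24 followed by 13 always means constituency 13).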

Also note that if you pass an invalid state or constituency code, the server returns a 404 status.
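This 404 behaviour lets the scraper discover how many constituencies each state has without hard-coding the counts. A minimal sketch of that stopping rule, assuming constituency codes within a state are contiguous (1, 2, 3, ...) so that the first 404 marks the end; existing_constituency_codes and get_status are hypothetical names.

```python
from typing import Callable, Iterator

NOT_FOUND = 404


def existing_constituency_codes(
    state_code: str,
    get_status: Callable[[str, int], int],
    max_code: int = 98,
) -> Iterator[int]:
    """Yield constituency codes for one state until the first 404.

    get_status is a hypothetical callable that requests the page for
    (state_code, constituency_code) and returns its HTTP status code.
    """
    for code in range(1, max_code + 1):
        if get_status(state_code, code) == NOT_FOUND:
            # Codes are assumed contiguous, so the first 404 ends this state.
            return
        yield code


def fake_status(state_code: str, code: int) -> int:
    """Stand-in for a real request: pretend the state has 3 constituencies."""
    return 200 if code <= 3 else NOT_FOUND


print(list(existing_constituency_codes("S24", fake_status)))
```

In real use, get_status would wrap something like requests.get(url, timeout=30).status_code for the URL of that state and constituency.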

Checking response:

Press F12 in Chrome to open Chrome DevTools. Select the Network tab and check the Preserve log checkbox. When you refresh the page or change the constituency, a new GET request is sent to the server.

The response to this request is plain HTML. To find the required data in it, inspect the web page. You will see that the results are enclosed in a tbody tag. However, the HTML source has multiple tbody tags, and none of them has a unique attribute such as an id or a class.

Hence, we find the position (index) of this tbody among all the tbody tags from the top of the page and fetch the data from it.
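Selecting a tbody purely by its position can be illustrated without any third-party packages. This sketch swaps in the standard library's html.parser instead of the BeautifulSoup approach used later; NthTbodyRows is a hypothetical class, and it assumes the target table has no nested tables and uses explicit closing td/th tags.

```python
from html.parser import HTMLParser


class NthTbodyRows(HTMLParser):
    """Collect the cell texts of every row in the n-th <tbody> (0-based)."""

    def __init__(self, index: int):
        super().__init__()
        self.index = index
        self.tbody_count = -1  # index of the most recently opened <tbody>
        self.inside = False    # are we currently inside the target <tbody>?
        self.rows = []         # each row is a list of cell strings
        self._cell = None      # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tbody":
            self.tbody_count += 1
            self.inside = self.tbody_count == self.index
        elif self.inside and tag == "tr":
            self.rows.append([])
        elif self.inside and tag in ("td", "th") and self.rows:
            self._cell = []

    def handle_endtag(self, tag):
        if tag == "tbody":
            self.inside = False
        elif self.inside and tag in ("td", "th") and self._cell is not None:
            self.rows[-1].append("".join(self._cell).strip())
            self._cell = None

    def handle_data(self, data):
        # Only text that sits inside a cell of the target tbody is kept
        if self._cell is not None:
            self._cell.append(data)


# Made-up sample HTML with two tables; we want the second tbody (index 1).
html_doc = """
<table><tbody><tr><td>menu</td></tr></tbody></table>
<table><tbody>
  <tr><th>Uttar Pradesh-Muzaffarnagar</th></tr>
  <tr><td>1</td><td>Candidate A</td></tr>
</tbody></table>
"""
parser = NthTbodyRows(index=1)
parser.feed(html_doc)
print(parser.rows)
```

On the real results page the index would be 10 (the 11th tbody), as in the scraper below.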

Code time:

Let's start writing code to fetch the data.

First, create a directory for our files. Then change the working directory to the new directory and create a virtual environment using Python 3 (for example, with python3 -m venv venv). Using a virtual environment when working with Python is recommended.

Activate the virtual environment and install the dependencies from the requirements.txt file using the command below.

```
pip install -r requirements.txt
```

Create a new Python file, election_data_collection.py.

Import the required packages:

```python
import json
import time

import requests
from bs4 import BeautifulSoup  # the lxml package must also be installed, since it is used as the parser
```

Create a list of state codes.

```python
# union territories U01-U07 followed by states S01-S29
states = [f"U{u:02d}" for u in range(1, 8)] + [f"S{s:02d}" for s in range(1, 30)]
```

Every state has a different number of constituencies, so we loop up to the maximum number of constituencies any state has. Currently, this number is less than 100. For each state, we terminate the loop as soon as a constituency code returns a 404.

```python
base_url = "http://results.eci.gov.in/pc/en/constituencywise/Constituencywise"
results = list()

for state in states:
    for constituency_code in range(1, 99):
        url = base_url + state + str(constituency_code) + ".htm?ac=" + str(constituency_code)
        # print("URL", url)
        response = requests.get(url, timeout=30)
```

Parse the response and fetch the tbody that encloses all the result data.

```python
response_text = response.text
soup = BeautifulSoup(response_text, 'lxml')
tbodies = list(soup.find_all("tbody"))

# the 11th tbody from the top (index 10) is the table we need to parse
tbody = tbodies[10]
```

For each row in the tbody, collect the candidate's name and vote counts into a dictionary. Repeat this for each constituency. Once all the data is collected, dump it into a JSON file for further analysis.

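```python
# write data to file; "w" overwrites the previous run instead of appending
# a second JSON array, which would make the file invalid JSON
with open("election_data.json", "w") as f:
    f.write(json.dumps(results, indent=2))
```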
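The row-to-dictionary step can also be written as a small table-driven function, so that the Jammu & Kashmir layout (which has an extra migrant votes column) is just a different list of column names. This is a sketch, assuming the column order used in the full code below (serial number, name, party, then the numeric columns); parse_row and the column lists are hypothetical, the sample rows are made up, and the "Total" row is handled separately in the full code.

```python
from typing import Dict, List, Union

# Numeric column layouts inferred from the full scraper code
REGULAR_COLUMNS = ["evm_votes", "post_votes", "total_votes", "share"]
JK_COLUMNS = ["evm_votes", "migrant_votes", "post_votes", "total_votes", "share"]


def parse_row(cells: List[str], is_jammu_kashmir: bool) -> Dict[str, Union[str, int, float]]:
    """Turn one candidate row (list of cell texts) into a dictionary."""
    columns = JK_COLUMNS if is_jammu_kashmir else REGULAR_COLUMNS
    row: Dict[str, Union[str, int, float]] = {
        "candidate_name": cells[1].strip().lower(),
        "party_name": cells[2].strip().lower(),
    }
    # Numeric cells start at index 3; pair each one with its column name
    for key, text in zip(columns, cells[3:]):
        text = text.strip()
        # vote share is a percentage with decimals; everything else is a count
        row[key] = float(text) if key == "share" else int(text)
    return row


print(parse_row(["1", "Candidate A", "Party X", "500000", "1200", "501200", "49.5"], False))
```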

Important Note:

Please do not flood the server with too many simultaneous requests. Always send one request at a time and put some sleep between requests. I have added a 0.5-second sleep between requests.
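A fixed time.sleep(0.5) after each request is the simplest option. One alternative is a small throttle that enforces a minimum gap between the starts of consecutive requests, so time spent waiting on a slow response counts toward the gap. This is a sketch; the Throttle class is hypothetical. Note that it is slightly less gentle than sleeping after every response, so keep the interval conservative.

```python
import time


class Throttle:
    """Ensure at least `interval` seconds pass between consecutive wait() calls."""

    def __init__(self, interval: float = 0.5):
        self.interval = interval
        self._last = None  # time.monotonic() value of the previous call

    def wait(self) -> None:
        now = time.monotonic()
        if self._last is not None:
            remaining = self.interval - (now - self._last)
            if remaining > 0:
                # Sleep only for whatever part of the interval is left
                time.sleep(remaining)
        self._last = time.monotonic()
```

In the scraper, you would create one Throttle(0.5) before the loops and call its wait() method right before each requests.get(...) call, instead of sleeping at the end of the loop body.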

Full Code:

"""

Author: Anurag Rana

Site: https://www.pythoncircle.com

"""

import requests

from bs4 import BeautifulSoup

import json

from lxml import html

import time

NOT_FOUND = 404

states = ["U0" + str(u) for u in range(1, 8)] + ["S0" + str(s) if s < 10 else "S" + str(s) for s in range(1, 30)]

# base_url = "http://results.eci.gov.in/pc/en/constituencywise/ConstituencywiseS2910.htm?ac=10"

base_url = "http://results.eci.gov.in/pc/en/constituencywise/Constituencywise"

results = list()

for state in states:

for constituency_code in range(1, 99):

url = base_url + state + str(constituency_code) + ".htm?ac=" + str(constituency_code)

# print("URL", url)

response = requests.get(url)

# if some state and constituency combination do not exists, 404, continue for next state

if NOT_FOUND == response.status_code:

break

response_text = response.text

soup = BeautifulSoup(response_text, 'lxml')

tbodies = list(soup.find_all("tbody"))

# 11the tbody from top is the table we need to parse

tbody = tbodies[10]

trs = list(tbody.find_all('tr'))

# all data for a constituency seat is stored in this dictionary

seat = dict()

seat["candidates"] = list()

for tr_index, tr in enumerate(trs):

# row at index 0 contains name of constituency

if tr_index == 0:

state_and_constituency = tr.find('th').text.strip().split("-")

seat["state"] = state_and_constituency[0].strip().lower()

seat["constituency"] = state_and_constituency[1].strip().lower()

continue

# first and second rows contains headers, ignore

if tr_index in [1, 2]:

continue

# for rest of the rows, get data

tds = list(tr.find_all('td'))

candidate = dict()

# if this is last row get total votes for this seat

if tds[1].text.strip().lower() == "total":

seat["evm_total"] = int(tds[3].text.strip())

if "jammu & kashmir" == seat["state"]:

seat["migrant_total"] = int(tds[4].text.strip())

seat["post_total"] = int(tds[5].text.strip())

seat["total"] = int(tds[6].text.strip())

else:

seat["post_total"] = int(tds[4].text.strip())

seat["total"] = int(tds[5].text.strip())

continue

else:

candidate["candidate_name"] = tds[1].text.strip().lower()

candidate["party_name"] = tds[2].text.strip().lower()

candidate["evm_votes"] = int(tds[3].text.strip().lower())

if "jammu & kashmir" == seat["state"]:

candidate["migrant_votes"] = int(tds[4].text.strip().lower())

candidate["post_votes"] = int(tds[5].text.strip().lower())

candidate["total_votes"] = int(tds[6].text.strip().lower())

candidate["share"] = float(tds[7].text.strip().lower())

else:

candidate["post_votes"] = int(tds[4].text.strip().lower())

candidate["total_votes"] = int(tds[5].text.strip().lower())

candidate["share"] = float(tds[6].text.strip().lower())

seat["candidates"].append(candidate)

# print(json.dumps(seat, indent=2))

results.append(seat)

print("Collected data for", seat["state"], state, seat["constituency"], constituency_code, len(results))

# Do not send too many hits to server. be gentle. wait.

time.sleep(0.5)

# write data to file

with open("election_data.json", "a+") as f:

f.write(json.dumps(results, indent=2))

Code is available on GitHub.

Running code:

Make sure the virtual environment is active before running the code.

```
# to collect the data
(venv) $ python election_data_collection.py

# to analyze the data
(venv) $ python election_data_analysis.py
```

It took me around 7 minutes to collect the data for all 543 constituencies with a 0.5-second delay between requests.
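As a rough back-of-the-envelope check on that figure: the sleeps alone account for about 4.5 minutes. The sketch below assumes the scraper's list of 36 state/UT codes and one extra 404 request per state (the request that ends each state's loop, which is not followed by a sleep); the remaining time is network and parsing overhead.

```python
constituencies = 543
delay_seconds = 0.5
states_and_uts = 36    # 7 union territories + 29 states in the scraper's list
observed_minutes = 7   # "around 7 minutes", as reported above

sleep_seconds = constituencies * delay_seconds         # one sleep per collected seat
requests_sent = constituencies + states_and_uts        # plus one 404 request per state/UT
other_seconds = observed_minutes * 60 - sleep_seconds  # network + parsing time

print(f"time spent sleeping: {sleep_seconds / 60:.1f} min")
print(f"average overhead per request: {other_seconds / requests_sent:.2f} s")
```

So roughly a quarter of a second per request goes to the round trip and parsing, which is why the total is noticeably longer than the sleep time alone.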

Analyzing the data:

I have created a few sample functions to analyze the data; they are in a separate file, election_data_analysis.py.

Since the data is already in the JSON file, we can use it instead of scraping the site again and again.

To analyze the data, load the contents of election_data.json into a Python list of dictionaries.

```python
import json

with open("election_data.json", "r") as f:
    data = json.load(f)
```

To get the highest number of votes any candidate got, use the function below.

```python
def candidate_highest_votes():
    highest_votes = 0
    candidate_name = None
    constituency_name = None

    for constituency in data:
        for candidate in constituency["candidates"]:
            if candidate["total_votes"] > highest_votes:
                candidate_name = candidate["candidate_name"]
                constituency_name = constituency["constituency"]
                highest_votes = candidate["total_votes"]

    print("Highest votes:", candidate_name, "from", constituency_name, "got", highest_votes, "votes")
```

To get the total number of NOTA (None of the Above) votes, use the function below.

```python
def nota_votes():
    nota_votes_count = sum(
        candidate["total_votes"]
        for constituency in data
        for candidate in constituency["candidates"]
        if candidate["candidate_name"] == "nota"
    )
    print("NOTA votes cast:", nota_votes_count)
```

Code is available on GitHub. Feel free to fork, rewrite, or optimize it.

You may add new features, analysis points, or visualizations such as graphs, pie charts, or bar charts.
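As one example of such an additional analysis point, here is a sketch that counts seats won per party, taking the winner of each constituency to be the candidate with the most total votes. It assumes the JSON structure produced by the scraper; seats_won_by_party and the sample data are hypothetical.

```python
from collections import Counter


def seats_won_by_party(data):
    """Count constituencies won per party (winner = most total votes)."""
    winners = (
        max(seat["candidates"], key=lambda cand: cand["total_votes"])
        for seat in data
        if seat["candidates"]  # skip seats with no parsed candidates
    )
    return Counter(winner["party_name"] for winner in winners)


sample = [
    {"constituency": "seat 1", "candidates": [
        {"candidate_name": "p", "party_name": "x", "total_votes": 100},
        {"candidate_name": "nota", "party_name": "none of the above", "total_votes": 5},
    ]},
    {"constituency": "seat 2", "candidates": [
        {"candidate_name": "q", "party_name": "y", "total_votes": 250},
        {"candidate_name": "r", "party_name": "x", "total_votes": 240},
    ]},
    {"constituency": "seat 3", "candidates": [
        {"candidate_name": "s", "party_name": "x", "total_votes": 300},
        {"candidate_name": "t", "party_name": "y", "total_votes": 120},
    ]},
]
print(seats_won_by_party(sample).most_common())
```

The resulting Counter can be fed directly to a plotting library to draw the bar or pie charts mentioned above.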
